Hybrid Relightable 3D Gaussian Rendering

A downloadable project

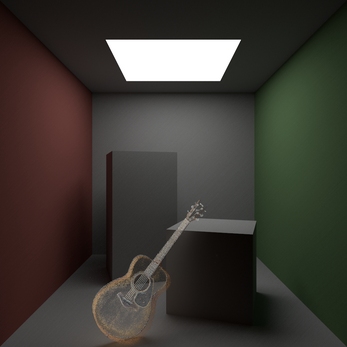

Our project addresses the challenge of recreating real-world objects as 3D assets. Our system converts video into realistic, relightable 3D Gaussian models that can be integrated directly into the Unity Game Engine.

The process begins by extracting individual frames from the input video. We then reconstruct the scene with structure from motion (SfM), a computer vision technique that detects and matches points of interest across frames and uses them to estimate each camera's position and orientation along with the 3D positions of the matched points. The result is a sparse point cloud representing the scene's structure, which serves as the starting point for the 3D Gaussian model. Each point in the cloud is replaced with a 3D Gaussian, defined by its position, shape (covariance matrix), transparency (alpha), and view-dependent color (spherical harmonics coefficients). These parameters are fine-tuned with stochastic gradient descent by comparing renderings of the model against the original video frames, while Gaussians are periodically split or cloned to add detail where needed, producing a refined 3D Gaussian model. This model can be imported into Unity like any other supported 3D file format.
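The covariance matrix must stay positive semi-definite while it is being optimized. A common parameterization, used by the original 3D Gaussian Splatting method, stores a per-axis scale vector and a rotation quaternion and builds Σ = R S Sᵀ Rᵀ from them. The minimal sketch below, written in Python for readability, assumes that parameterization; the text does not say how this project stores its covariances, and the function names are illustrative.

```python
import math

def quat_to_rotation(w, x, y, z):
    """Convert a (w, x, y, z) quaternion, normalized here, into a 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def covariance(scale, quat):
    """Build Sigma = R S S^T R^T from per-axis scales and a (w, x, y, z) quaternion."""
    R = quat_to_rotation(*quat)
    # M = R S: scaling column j of R by scale[j] equals multiplying by diag(scale).
    M = [[R[i][j] * scale[j] for j in range(3)] for i in range(3)]
    # Sigma = M M^T is symmetric positive semi-definite by construction.
    return [[sum(M[i][k] * M[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
```

For example, an identity rotation with scale (1, 2, 3) yields diag(1, 4, 9), and a 90° rotation about Z swaps the first two axes to give diag(4, 1, 9). Because the optimizer only ever touches the scales and the quaternion, no update can produce an invalid covariance.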
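The split and clone steps are usually driven by the positional gradients accumulated during optimization. The sketch below assumes the rule from the original 3D Gaussian Splatting method: a Gaussian whose average gradient is large is cloned if it is small and split if it is large. The thresholds and the `GaussianStats` fields are hypothetical placeholders loosely modeled on that method's defaults, not values taken from this project.

```python
from dataclasses import dataclass

# Hypothetical thresholds, loosely modeled on the reference 3D Gaussian Splatting
# defaults; this project's actual values are not stated.
GRAD_THRESHOLD = 0.0002    # average view-space positional gradient that triggers densification
PERCENT_DENSE = 0.01       # fraction of the scene extent separating "small" from "large"
SPLIT_SCALE_DIVISOR = 1.6  # how much each child shrinks when a Gaussian is split in two

@dataclass
class GaussianStats:
    avg_grad: float   # positional gradient norm averaged over the views that saw the Gaussian
    max_scale: float  # largest of the Gaussian's three axis scales

def densify_action(g: GaussianStats, scene_extent: float) -> str:
    """Decide whether one Gaussian is kept as-is, cloned, or split."""
    if g.avg_grad < GRAD_THRESHOLD:
        return "keep"   # the region is already reconstructed well enough
    if g.max_scale <= PERCENT_DENSE * scene_extent:
        return "clone"  # small Gaussian in an under-reconstructed region: duplicate it
    return "split"      # large Gaussian covering too much detail: replace it with smaller ones

def split_child_scales(scale):
    """Scales for the children of a split Gaussian (their positions would be sampled from the parent)."""
    return tuple(s / SPLIT_SCALE_DIVISOR for s in scale)
```

The gradient test finds regions the optimizer is still struggling with, and the size test picks the remedy: small Gaussians are copied to fill in missing geometry, while large ones are broken up so they can represent finer detail.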

As the Graphics team lead, I directed and contributed extensively to the development of our custom hybrid rendering pipeline in Unity. To achieve realistic lighting and support multiple primitive types, we overrode Unity's default render pipeline and implemented a hybrid path tracer that ray traces both triangle meshes and point-based 3D Gaussians. Our custom render pipeline is built from a series of compute shaders dispatched through a Unity command buffer at the end of the camera rendering event. The path tracer supports jittered sampling for anti-aliasing, metallic-roughness PBR BRDFs, importance sampling, and a Bounding Volume Hierarchy (BVH) to accelerate ray tracing.
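Jittered (stratified) sampling divides each pixel into an n × n grid and places one random sample in each cell, which spreads samples more evenly than purely random placement and smooths jagged edges. The Python sketch below shows one common form of the idea; the function names and the square grid are assumptions, since the text does not say how this project distributes its jitter.

```python
import random

def jittered_samples(px, py, n, rng=random.random):
    """Return n*n sample positions in pixel (px, py), one random point per grid cell."""
    samples = []
    for j in range(n):
        for i in range(n):
            # Cell (i, j) spans [i/n, (i+1)/n) x [j/n, (j+1)/n) inside the pixel.
            samples.append((px + (i + rng()) / n, py + (j + rng()) / n))
    return samples

def render_pixel(px, py, n, radiance, rng=random.random):
    """Average a radiance function over the jittered samples (a simple box filter)."""
    points = jittered_samples(px, py, n, rng)
    return sum(radiance(x, y) for x, y in points) / len(points)
```

For a hard edge that covers exactly half of a pixel, every sample in the left two columns of a 4 × 4 grid lands on the bright side, so the pixel resolves to 0.5 instead of snapping to 0 or 1.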
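A metallic-roughness BRDF is commonly built from a Lambertian diffuse term plus a Cook-Torrance specular term with a GGX normal distribution, a Smith masking-shadowing term, and Schlick's Fresnel approximation. The Python sketch below assumes that combination, the α = roughness² remapping, the k = α/2 Schlick-GGX form of the Smith term, and 4% reflectance for dielectrics; the text does not specify which terms the project's shaders use. All vectors are unit length and point away from the surface.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def ggx_d(n_dot_h, alpha):
    """GGX / Trowbridge-Reitz normal distribution function."""
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

def smith_g1(n_dot_x, alpha):
    """Schlick-GGX approximation of Smith masking for a single direction."""
    k = alpha / 2.0
    return n_dot_x / (n_dot_x * (1.0 - k) + k)

def schlick_fresnel(f0, v_dot_h):
    return tuple(f + (1.0 - f) * (1.0 - v_dot_h) ** 5 for f in f0)

def brdf(n, v, l, base_color, metallic, roughness):
    """Evaluate the RGB BRDF value for view direction v and light direction l."""
    n_dot_l, n_dot_v = dot(n, l), dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer is below the surface
    h = normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h, v_dot_h = max(dot(n, h), 0.0), max(dot(v, h), 0.0)
    alpha = max(roughness * roughness, 1e-3)  # perceptual roughness -> GGX alpha
    # Dielectrics reflect about 4% at normal incidence; metals tint reflection by base color.
    f0 = tuple(0.04 * (1.0 - metallic) + c * metallic for c in base_color)
    F = schlick_fresnel(f0, v_dot_h)
    D = ggx_d(n_dot_h, alpha)
    G = smith_g1(n_dot_v, alpha) * smith_g1(n_dot_l, alpha)
    specular = tuple(D * G * f / (4.0 * n_dot_v * n_dot_l) for f in F)
    # Metals have no diffuse lobe, and light reflected specularly is not also diffused.
    diffuse = tuple((1.0 - metallic) * (1.0 - f) * c / math.pi for f, c in zip(F, base_color))
    return tuple(s + d for s, d in zip(specular, diffuse))
```

The `metallic` parameter blends between two behaviors: at 0 the surface is a white-specular dielectric with a colored diffuse lobe, and at 1 all reflection is specular and tinted by `base_color`.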
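The text does not say which distributions the path tracer importance-samples. One common choice for the diffuse lobe is cosine-weighted hemisphere sampling, sketched below in Python as an assumption: directions are drawn with probability density cos θ / π in a local frame whose +Z axis is the surface normal, so the cosine factor in the rendering equation largely cancels against the pdf. The `estimate` helper shows the resulting Monte Carlo estimator; both names are illustrative.

```python
import math
import random

def cosine_sample_hemisphere(u1, u2):
    """Map two uniform numbers in [0, 1) to a unit direction around +Z with pdf cos(theta)/pi."""
    r = math.sqrt(u1)                  # radius of a uniform point on the unit disk (Malley's method)
    phi = 2.0 * math.pi * u2
    x, y = r * math.cos(phi), r * math.sin(phi)
    z = math.sqrt(max(0.0, 1.0 - u1))  # lift the disk point onto the hemisphere; z = cos(theta)
    return (x, y, z), z / math.pi

def estimate(f, n, rng):
    """Monte Carlo estimate of the integral of f over the hemisphere using the sampler above."""
    total = 0.0
    for _ in range(n):
        d, pdf = cosine_sample_hemisphere(rng.random(), rng.random())
        total += f(d) / pdf  # weight each sample by 1 / pdf
    return total / n
```

Estimating the integral of cos²θ over the hemisphere (exact value 2π/3) this way has noticeably lower variance than uniform hemisphere sampling, because samples are concentrated where the integrand is large.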
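A BVH speeds up ray tracing by letting the tracer skip every primitive inside a bounding box the ray misses. The sketch below assumes a flat array of nodes with axis-aligned bounding boxes (the `Node` layout is hypothetical), uses the standard slab test, and walks the tree with an explicit stack, which mirrors how traversal is usually written in HLSL compute shaders since HLSL does not support recursive functions. It returns the candidate primitives from the leaves the ray reaches; the exact triangle or Gaussian tests would then run on those.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    bmin: tuple     # box minimum corner (x, y, z)
    bmax: tuple     # box maximum corner (x, y, z)
    left: int = -1  # child indices into the node array; -1 marks a leaf
    right: int = -1
    prims: list = field(default_factory=list)  # primitive ids stored in a leaf

def ray_aabb(origin, direction, bmin, bmax, t_max=math.inf):
    """Slab test: does origin + t * direction hit the box for some t in [0, t_max]?"""
    t0, t1 = 0.0, t_max
    for a in range(3):
        if direction[a] == 0.0:
            # Parallel to this pair of planes: the origin must already lie between them.
            if origin[a] < bmin[a] or origin[a] > bmax[a]:
                return False
            continue
        inv = 1.0 / direction[a]
        ta, tb = (bmin[a] - origin[a]) * inv, (bmax[a] - origin[a]) * inv
        if ta > tb:
            ta, tb = tb, ta
        t0, t1 = max(t0, ta), min(t1, tb)
        if t0 > t1:
            return False  # the per-axis entry/exit intervals do not overlap
    return True

def traverse(nodes, origin, direction, t_max=math.inf):
    """Return ids of primitives in every leaf whose box the ray enters (node 0 is the root)."""
    candidates = []
    stack = [0]
    while stack:
        node = nodes[stack.pop()]
        if not ray_aabb(origin, direction, node.bmin, node.bmax, t_max):
            continue  # prune the whole subtree
        if node.left < 0:
            candidates.extend(node.prims)  # exact triangle/Gaussian tests run on these
        else:
            stack.append(node.left)
            stack.append(node.right)
    return candidates
```

Passing the distance of the closest opaque hit found so far as `t_max` lets the traversal also skip boxes that lie entirely behind it.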

When rendering, we traverse the BVH of each game object in the scene, and upon reaching a leaf node, we test the ray against all of its primitives. We use the Möller-Trumbore algorithm for ray-triangle intersections and interpolate vertex attributes with the resulting barycentric coordinates to obtain their values at the hit point. Ray-Gaussian intersections are handled by finding the point along the ray where the Gaussian's response is highest and then evaluating the Gaussian function at that point to compute its opacity. All intersections are inserted into a distance-sorted list, and those beyond the point where the path's transmittance falls to its minimum threshold are discarded. The path then reflects recursively, losing energy to each material's absorption, until it reaches the maximum bounce limit. This process produces realistic, physically based lighting interactions.
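The Möller-Trumbore test solves for the ray distance t and two barycentric coordinates (u, v) at once, without precomputing the triangle's plane. The Python sketch below follows the standard formulation; the returned weights are then used to blend per-vertex attributes such as normals or UVs at the hit point. The function names and the epsilon value are illustrative.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def moller_trumbore(orig, d, v0, v1, v2, eps=1e-9):
    """Return (t, u, v) for a hit in front of the ray, or None.
    u and v weight v1 and v2; v0's weight is 1 - u - v."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None  # hit point lies outside the triangle
    t = dot(e2, q) * inv_det
    if t <= eps:
        return None  # intersection is behind the ray origin
    return t, u, v

def interpolate(a0, a1, a2, u, v):
    """Blend per-vertex attributes (normals, UVs, colors) with barycentric weights."""
    w = 1.0 - u - v
    return tuple(w * x0 + u * x1 + v * x2 for x0, x1, x2 in zip(a0, a1, a2))
```

For a ray fired straight down at (0.25, 0.25) onto the unit right triangle in the z = 0 plane, the test returns u = v = 0.25, so red, green, and blue vertex colors interpolate to (0.5, 0.25, 0.25).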
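Along a ray x(t) = o + t·d, the Gaussian response exp(-½ (x - μ)ᵀ Σ⁻¹ (x - μ)) peaks where the quadratic form is smallest. Setting its derivative with respect to t to zero gives the closed form t* = dᵀ Σ⁻¹ (μ - o) / (dᵀ Σ⁻¹ d). The Python sketch below assumes that the opacity at the hit is the Gaussian's learned opacity scaled by the response at t*, which matches the description above; the function names are hypothetical, and the 3x3 inverse is written out by hand to keep the example dependency-free.

```python
import math

def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate (assumes m is invertible)."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    A, B, C = e * i - f * h, -(d * i - f * g), d * h - e * g
    det = a * A + b * B + c * C
    return [
        [A / det, -(b * i - c * h) / det, (b * f - c * e) / det],
        [B / det, (a * i - c * g) / det, -(a * f - c * d) / det],
        [C / det, -(a * h - b * g) / det, (a * e - b * d) / det],
    ]

def ray_gaussian(origin, direction, mean, cov, opacity):
    """Return (t_peak, alpha): the distance of peak Gaussian response along the ray
    and the opacity evaluated there."""
    prec = inverse3(cov)                              # Sigma^-1 (precision matrix)
    mu_o = tuple(m - o for m, o in zip(mean, origin))  # mu - o
    prec_d = mat_vec(prec, direction)                  # Sigma^-1 d (Sigma is symmetric)
    t = sum(a * b for a, b in zip(prec_d, mu_o)) / sum(a * b for a, b in zip(prec_d, direction))
    x = tuple(o + t * dd for o, dd in zip(origin, direction))
    diff = tuple(xi - mi for xi, mi in zip(x, mean))
    mahalanobis2 = sum(a * b for a, b in zip(diff, mat_vec(prec, diff)))
    return t, opacity * math.exp(-0.5 * mahalanobis2)
```

A ray passing through the mean returns the full learned opacity, while a ray that misses the mean by one standard deviation along a principal axis returns opacity · e^(-1/2), regardless of how stretched the Gaussian is along the other axes.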
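The text does not give the compositing rule or the cutoff value, so the sketch below assumes standard front-to-back alpha compositing over the distance-sorted hits, with opaque triangle hits given alpha = 1 and a hypothetical `MIN_TRANSMITTANCE` threshold. Once the accumulated transmittance drops below the threshold, every farther hit is discarded, because together those hits could contribute at most that small remaining fraction of light.

```python
import bisect
from dataclasses import dataclass, field

MIN_TRANSMITTANCE = 1e-3  # hypothetical cutoff; the project's value is not stated

@dataclass(order=True)
class Hit:
    t: float                               # distance along the ray; the only sort key
    alpha: float = field(compare=False)    # 1.0 for opaque triangles
    color: tuple = field(compare=False)

def composite(hits):
    """Front-to-back compositing over a distance-sorted hit list.
    Returns (color, remaining transmittance, number of hits used)."""
    sorted_hits = []
    for h in hits:
        bisect.insort(sorted_hits, h)  # keep the list ordered by distance t
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    used = 0
    for h in sorted_hits:
        weight = transmittance * h.alpha  # light reaching this hit that it absorbs/reflects
        for k in range(3):
            color[k] += weight * h.color[k]
        transmittance *= 1.0 - h.alpha
        used += 1
        if transmittance < MIN_TRANSMITTANCE:
            break  # everything farther away is effectively invisible
    return tuple(color), transmittance, used
```

For example, a half-transparent red Gaussian at t = 1, a half-transparent green one at t = 2, and an opaque blue triangle at t = 5 composite to (0.5, 0.25, 0.25); a hit at t = 9 behind the triangle is never visited.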

External Links

For more information, go to our team's website.

To view our source code, go here.

| | |
|---|---|
| Status | In development |
| Category | Other |
| Author | Jackson Vanderheyden |